Benchmarking

Iris includes architecture for benchmarking performance and other metrics of interest. This is done using the Airspeed Velocity (ASV) package.

Full detail on the setup and how to run or write benchmarks is in benchmarks/README.md in the Iris repository.

Continuous Integration

The primary purpose of Airspeed Velocity, and Iris’ specific benchmarking setup, is to monitor for performance changes using statistical comparison between commits, and this forms part of Iris’ continuous integration.

Accurately assessing performance takes longer than functionality pass/fail tests, so the benchmark suite is not run automatically against open pull requests. Instead, it is run overnight against each of the previous day's commits to check whether any commit has introduced a performance shift. Detected shifts are reported in a new Iris GitHub issue.

If a pull request author/reviewer suspects their changes may cause performance shifts, they can manually request that their pull request be benchmarked by adding the benchmark_this label to the PR. Read more in benchmarks/README.md.

Other Uses

Even when not statistically comparing commits, ASV’s accurate execution time results - recorded using a sophisticated system of repeats - have other applications.

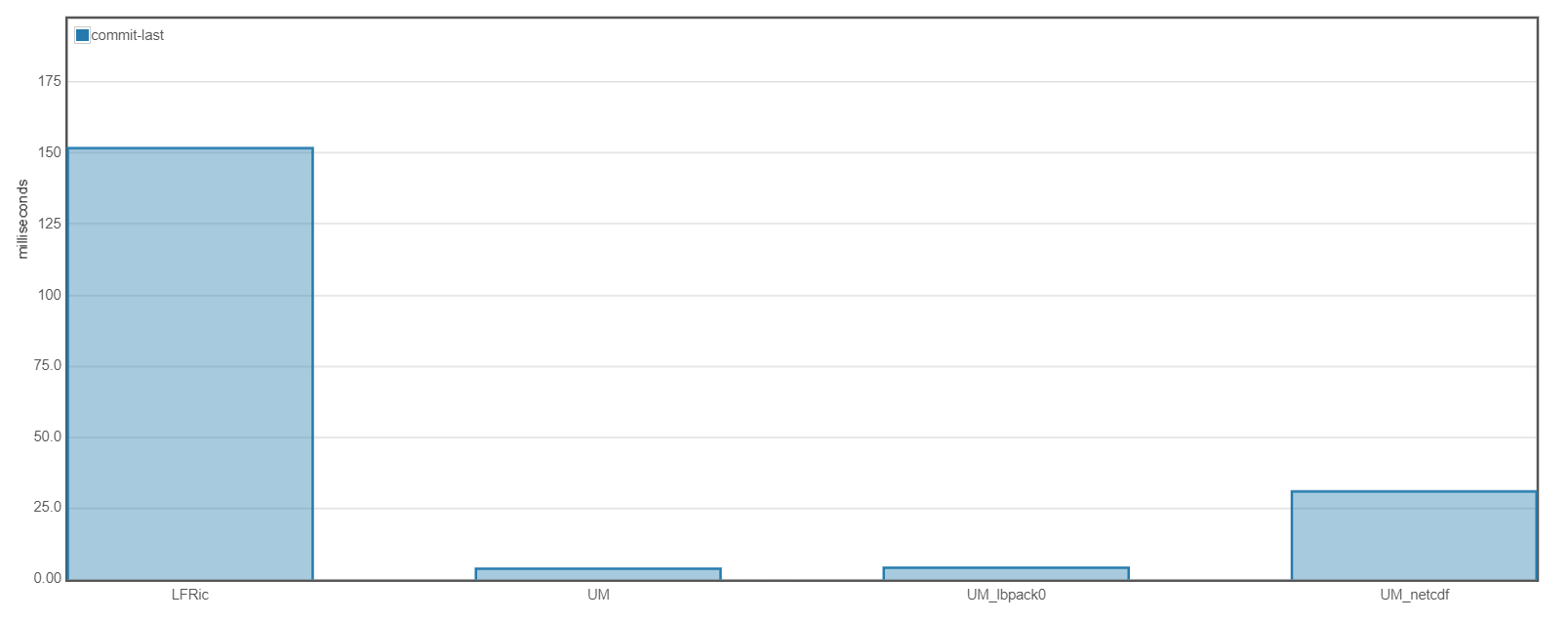

Absolute numbers can be interpreted providing they are recorded on a dedicated resource.

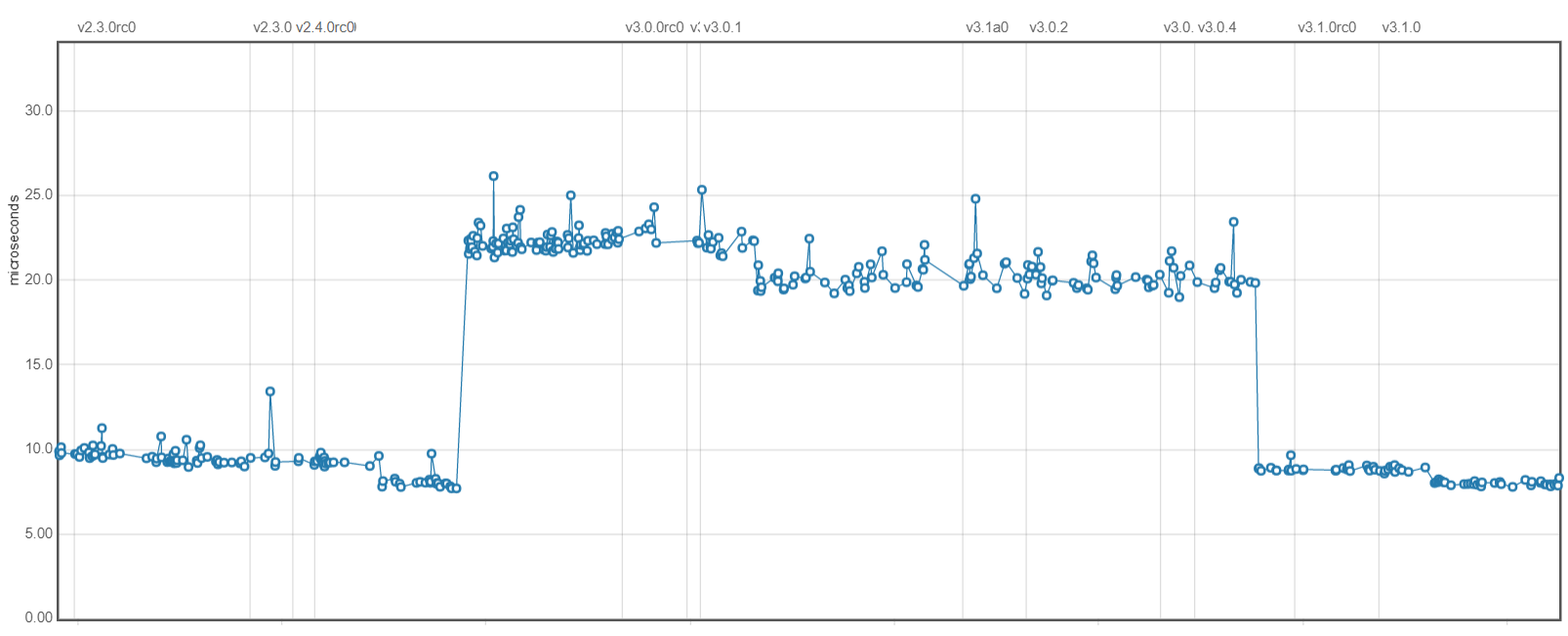

Results for a series of commits can be visualised for an intuitive understanding of when and why changes occurred.

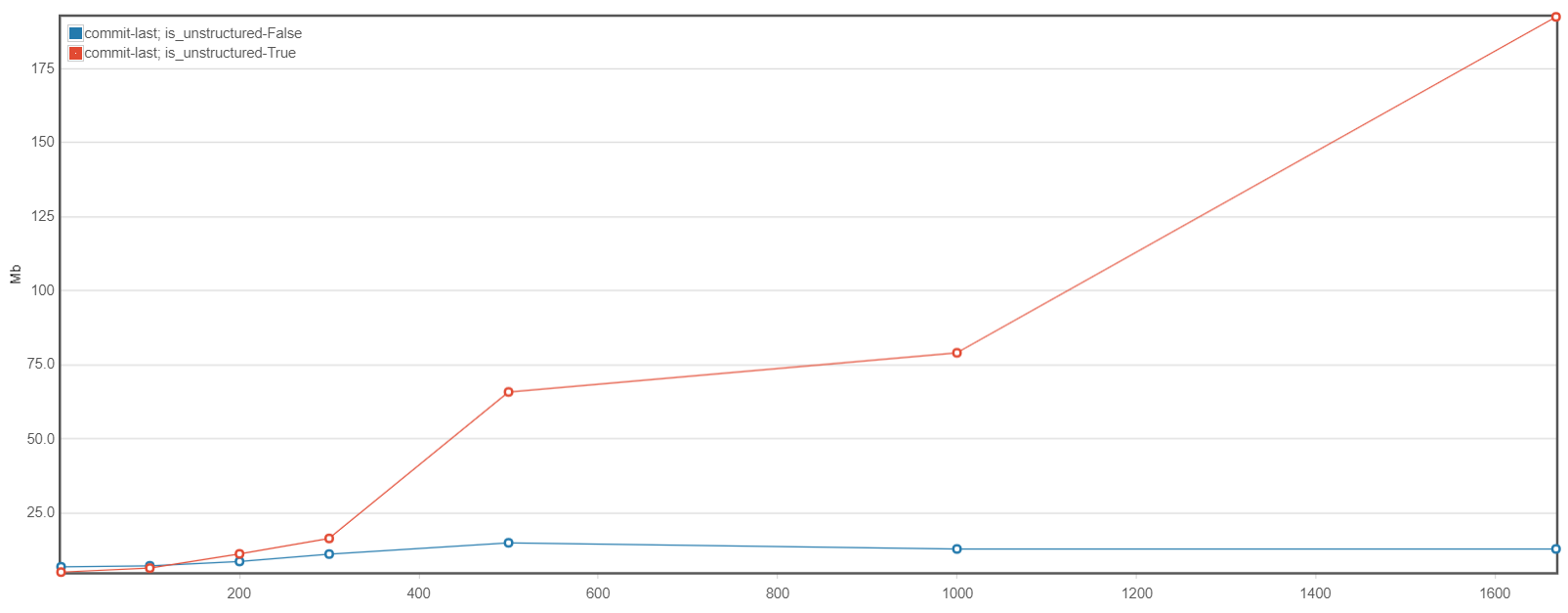

Parameterised benchmarks make it easy to visualise:

This also isn’t limited to execution times. ASV can also measure memory demand, and even arbitrary numbers (e.g. file size, regridding accuracy), although without the repetition logic that execution timing has.
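As a rough illustration of the benchmark kinds mentioned above, the sketch below follows ASV's documented naming conventions: `params` / `param_names` for parameterisation, and the `time_`, `peakmem_` and `track_` method prefixes. The class name, the parameter values and the workload (sorting a list, writing a temporary file) are hypothetical stand-ins and are not taken from the Iris benchmark suite.

```python
import os
import tempfile


class SortScaling:
    """Hypothetical parameterised benchmark, not part of the Iris suite."""

    # ASV runs every benchmark method once per value listed here.
    params = [1_000, 10_000, 100_000]
    param_names = ["n_values"]

    def setup(self, n_values):
        # Called by ASV before each benchmark; not included in the timing.
        self.data = list(range(n_values, 0, -1))

    def time_sort(self, n_values):
        # "time_" prefix: execution time, measured with ASV's repeat logic.
        sorted(self.data)

    def peakmem_sort(self, n_values):
        # "peakmem_" prefix: peak memory use of the benchmarking process.
        sorted(self.data)

    def track_file_size(self, n_values):
        # "track_" prefix: ASV records whatever number is returned,
        # here the size in bytes of a temporary file.
        with tempfile.NamedTemporaryFile(delete=False) as handle:
            handle.write(bytes(n_values))
            path = handle.name
        try:
            return os.path.getsize(path)
        finally:
            os.remove(path)

    # Optional label for the tracked value in ASV's reports.
    track_file_size.unit = "bytes"
```

Because each method is run for every entry in `params`, the results can be plotted against `n_values` to show how time, memory or the tracked value scales.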
